Different Game Genres Enhance Real-Life Abilities

As the boundaries between the digital and real worlds increasingly blur, digital games are having a growing impact on marketable skills. Today’s games are not merely entertainment; they are also potent educational tools. This approach to learning even has a name: gamification, the use of games and game design elements to improve engagement, problem-solving, and teamwork. Gamification is being integrated into education, healthcare, scientific research, and business. This article explores how different game genres and specific games can enhance real-life skills.

Strategic Thinking Through Real-Time Strategy Games

Real-time strategy (RTS) games are a genre that requires players to juggle multiple tasks simultaneously under pressure. These games are well suited to developing and honing strategic thinking because they simulate complex environments where decisions can have immediate or long-term consequences. Players learn to manage resources and adjust strategies in real time, a close analogy to the strategic challenges they face in real life.

Two examples of RTS games are StarCraft II and Age of Empires. Both offer complex, multi-layered gameplay requiring quick thinking and strategic foresight. They use sophisticated AI and real-time processing to create challenging scenarios in which AI opponents rapidly adapt to the strategies players use against them. The result is a dynamic environment in which players develop critical thinking and adaptive strategies.

The skills learned playing RTS games transfer well to the real world of business management, military strategy, and crisis response. Players learn to manage resources efficiently, anticipate opponents’ moves, and make quick decisions.

For a practical application within a fantasy realm, consider RAID: Shadow Legends. Although it is a turn-based role-playing game rather than a true RTS, it emphasizes strategic resource management, and its onscreen tactical combat helps sharpen planning skills.

Problem-solving and Puzzle Games

Puzzle games are designed to challenge our intelligence. They make players approach problems from different angles, fostering mindsets that are both flexible and analytical. Two examples are Portal and Tetris Effect. Both present puzzles that require logical reasoning, spatial awareness, and pattern recognition, and Portal builds its puzzles around physics-based mechanics. These games entertain while they educate and improve mental capabilities.

Playing puzzle games can strengthen one’s ability to analyze data, structure projects, and navigate complex issues in professional settings. These cognitive skills are especially useful in fields that demand a high level of analytical thinking and innovation, including engineering, computer programming, and scientific research.

Role Playing Games and Social Skills Development

Massively multiplayer online role-playing games (MMORPGs) are virtual playgrounds where players interact in persistent, expansive worlds. These games are powerful platforms that mimic the complexities of real-world social interactions, and participating in these digital societies develops interpersonal skills that transfer to the real world. Two examples are World of Warcraft and EVE Online. Both create social communities where players collaborate to achieve common goals. Their success depends on high-speed, high-bandwidth networks that let thousands of players around the world communicate and interact in real time. Through role-playing in these virtual worlds and societies, players learn to negotiate, collaborate, and lead, which provides a training ground for managing real-life group dynamics.

Shooter Games Teach Quick Thinking and Reactions

First-person shooter (FPS) games are high-octane, demanding virtual worlds where players make rapid decisions and hone their psychomotor skills. Their immersive, fast-paced nature makes them well suited to developing the skills associated with high-stress jobs. Call of Duty and Overwatch are two fast-paced, demanding action games that exercise hand-eye coordination, reflexes, and split-second decision-making. They use high-frame-rate graphics and responsive control systems to simulate real-time engagements. These skills have practical applications in jobs such as driving, piloting, and medicine.

Sandbox Games Encourage Creativity

Sandbox games offer open-world experiences where creativity is both encouraged and required. Rather than following defined goals, players explore, manipulate the virtual environment, and build complex structures and systems from scratch. These games serve as a canvas for innovation and experimentation, giving players the tools to think creatively and solve problems. Minecraft and The Sims are two examples: digital sandboxes that let players test and implement ideas without real-world constraints.

Narrative Games Improve Emotional Intelligence

Narrative games are about storytelling. They emphasize character development and offer players a deeply emotional and ethical landscape in which to play. Characters face difficult decisions that mirror real-life scenarios requiring empathy and emotional insight. The Last of Us and Life is Strange are two narrative games that challenge players to make moral decisions and build relationships, enhancing empathy and emotional intelligence. These qualities can be invaluable in professions that require strong interpersonal skills and emotional insight, such as teaching, counselling, and team management.

Gaming as Advanced Learning Platforms

In our technology-driven world, where digital literacy and cognitive flexibility are paramount, gaming’s role extends beyond entertainment to become a crucial part of personal and professional growth. As games evolve along with high-speed, high-bandwidth networking and telecommunications, their potential to serve as advanced learning platforms grows.

Technologies like virtual reality (VR), augmented reality (AR), and artificial intelligence (AI) are now part of the gaming world, enhancing immersive experiences and offering new ways to learn. No longer are video games just escapist entertainment. They are transformational environments for skills development. For educators and trainers, games provide simulated challenges that resemble real life.

Imagine what the world of video games will look like a decade from now as feeds and speeds continue to accelerate, and how this technology will change the way we learn and work. It will have taken the art far beyond the world of Pong and Monday Night Football.

Dijam is co-founder and COO of GridRaster, a company well-versed in developing augmented, virtual, and mixed realities for industrial and commercial applications. GridRaster provides cloud-based augmented and virtual reality (AR/VR) platforms and experiences for mobile devices and computing environments.

The world of digital twinning is no longer in its infancy, and immersive technologies that use AR/VR headsets are leading aerospace, defence, transportation, and manufacturing companies to integrate mixed realities into their planning, product development, and production processes.

In this posting, Dijam introduces Apple’s new Vision Pro headset and how it will help manufacturers and engineers leverage the Metaverse. The Metaverse is considered by many to be the next evolution of the Internet. So please enjoy and feel free to comment.

Manufacturing is embracing new technologies. While the Internet and mobile technology have played a major role in this evolution, Apple’s Vision Pro headset, combined with the Metaverse, offers manufacturers a promising new experience.

Equipped with two high-resolution main cameras, six world-facing tracking cameras, four eye-tracking cameras, a TrueDepth camera, multiple sensors, a LiDAR scanner, and more, the Vision Pro has features that distinguish it from other AR/VR headsets and give manufacturers an effective immersive tool.

The Vision Pro is controlled with hand and finger gestures and voice commands. It has two displays, one for each eye, with a combined total of 23 million pixels. The visual experience is nothing short of extraordinary. A custom 3D lens keeps the user interface consistently visible, and high dynamic range (HDR) support enhances depth, colour, and contrast.

Does that make Vision Pro just a better virtual gaming tool, or has it been designed to revolutionize manufacturing?

Manufacturers, Vision Pro and the Metaverse

Manufacturers today can leverage the Metaverse and build digital twins to create greater efficiencies in their operations. Vision Pro can make this even better.

The Metaverse is evolving. This shared virtual universe, where users interact with each other in a computer-generated environment, has taken off and is being used to create virtual retail spaces, warehouses, and manufacturing facilities.

Digital twins inhabit the Metaverse. They are digital representations, or replicas, of real-world entities or systems, including physical objects, processes, and people. Using data from sensors, Internet-of-Things (IoT) devices, and other sources, digital twins simulate reality.

Vision Pro’s features make it a natural companion for the Metaverse. Its spatial computing capability lets workers overlay digital twin information, such as instructions, diagrams, or 3D models, onto reality. Visual cues, step-by-step guidance, and real-time feedback make operational processes more efficient. Maintenance tasks are streamlined because technicians can access digital manuals, diagnostics, and remote expert support hands-free. Overall productivity improves and downtime is reduced.

Digital twins provide real-time visibility into inventory, production, and distribution. They help identify bottlenecks, optimize logistics, shorten start-up time for new operations, enhance virtual prototyping, and accelerate product testing and design. The result is faster time-to-market at reduced cost.

In retail, the Metaverse combined with Vision Pro becomes a world of virtual stores and showrooms that enhance the customer’s experience, letting shoppers explore and interact with products in virtual space and driving online sales.

Manufacturers can use Vision Pro in the Metaverse for employee training simulations, especially where complex machinery is involved. This can improve employee skills and enhance safety. It also makes it possible to create virtual workspaces that span a wide geography, promoting collaboration and communication even among remote team members. Manufacturers can also leverage digital twins when physical presence is challenging.

3D & AI in Immersive Mixed Reality

One of the key requirements for mixed reality applications in the Metaverse is precisely overlaying a digital object onto its counterpart in the physical world. The overlay provides a visual presentation and work instructions for assembly and training. In manufacturing, overlaying a physical object with its digital twin can reveal errors or defects, and users can track objects and adjust renderings as work progresses.

Most on-device object tracking systems use 2D images or marker-based tracking. This severely limits overlay accuracy in 3D because 2D tracking cannot estimate depth accurately, and therefore cannot accurately estimate scale or pose. As a result, even when an overlay looks like a good match from one angle or position, it loses alignment as the user moves around in six degrees of freedom (6DOF).
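To see why a 2D-only match can drift, consider a simple pinhole-camera model. This is an illustrative sketch, not GridRaster’s method: it assumes a single lateral axis, a focal length of 1, and a hypothetical function named `project`. An overlay placed at twice the true depth and twice the lateral offset lies on the same viewing ray, so it looks perfectly aligned from the starting viewpoint; once the camera moves sideways, parallax pulls the two apart.

```python
# Illustrative pinhole-camera sketch (assumptions: one lateral axis,
# focal length 1, hypothetical names). Shows how an overlay at the wrong
# depth can match from one viewpoint yet drift when the viewer moves.

def project(x: float, z: float, camera_x: float = 0.0, f: float = 1.0) -> float:
    """Project a point at lateral position x and depth z for a camera at camera_x."""
    return f * (x - camera_x) / z

# The real part sits 2 m away. The overlay was placed at the wrong depth
# (4 m) but scaled so it lies on the same viewing ray from the start position.
real = (0.5, 2.0)
overlay = (1.0, 4.0)

for camera_x in (0.0, 0.5):  # start position, then a 0.5 m step sideways
    r = project(*real, camera_x=camera_x)
    o = project(*overlay, camera_x=camera_x)
    print(f"camera at {camera_x}: real={r:.3f} overlay={o:.3f} error={abs(r - o):.3f}")
```

From the starting position the error is zero; after the sideways step the overlay is off by 0.125 image units. Estimating depth correctly, which 3D tracking does and 2D tracking cannot, removes that drift.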

Not familiar with the term 6DOF? It stands for six degrees of freedom. Machines or motion systems on the drawing board are designed to move a certain way but may move differently in the real world. The six types of motion are three translations (forward/backward, left/right, and up/down) and three rotations (pitch, yaw, and roll). Only a 3D digital simulation captures all of them. When you add deep learning-based 3D artificial intelligence (AI), users can identify objects of arbitrary shape and size in various orientations in the virtual space and compare them with their real-world counterparts.
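A 6DOF pose can be written as three translations plus three rotations. The sketch below compares a designed pose against a measured one, axis by axis, the kind of design-versus-reality check described above. It is a hypothetical illustration: the names `Pose6DOF`, `deviation`, and `out_of_tolerance` and the tolerances of 5 mm and 0.5 degrees are assumptions, and it uses the conventional names surge, sway, and heave for the three translations.

```python
from dataclasses import dataclass, fields

@dataclass
class Pose6DOF:
    # Three translations (metres)
    surge: float  # forward/backward
    sway: float   # left/right
    heave: float  # up/down
    # Three rotations (degrees)
    pitch: float
    yaw: float
    roll: float

LINEAR_AXES = {"surge", "sway", "heave"}

def deviation(planned: Pose6DOF, observed: Pose6DOF) -> dict[str, float]:
    """Per-axis difference between the designed pose and the measured pose."""
    return {f.name: getattr(observed, f.name) - getattr(planned, f.name)
            for f in fields(Pose6DOF)}

def out_of_tolerance(planned: Pose6DOF, observed: Pose6DOF,
                     lin_tol: float = 0.005, ang_tol: float = 0.5) -> list[str]:
    """Return the axes whose deviation exceeds the (hypothetical) tolerances."""
    return [axis for axis, d in deviation(planned, observed).items()
            if abs(d) > (lin_tol if axis in LINEAR_AXES else ang_tol)]

planned = Pose6DOF(surge=1.0, sway=0.0, heave=0.5, pitch=0.0, yaw=90.0, roll=0.0)
observed = Pose6DOF(surge=1.002, sway=0.0, heave=0.5, pitch=0.0, yaw=91.2, roll=0.0)
print(out_of_tolerance(planned, observed))  # → ['yaw']
```

Here the 2 mm surge error is within tolerance, but the 1.2-degree yaw error is flagged. A 2D view could easily miss a rotation like this.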

Working in the Cloud Environment

Technologies like AR/VR have been in use for several years. Many companies have deployed virtual solutions in which all data is stored locally. This severely limits the performance and scale of today’s virtual designs. It also limits the ability to share knowledge between organizations, which can prove critical in designing new products and in finding the best approach to virtual buildouts.

To overcome these limitations, companies are turning to cloud-based (or remote server-based) AR/VR platforms powered by distributed architecture and 3D vision-based AI. These cloud platforms provide the performance and scalability needed to drive innovation in the industry at speed and scale.

Vision Pro and its successors are among the technologies shaping the Metaverse now and in the future. New extended reality devices, such as brain-computer interfaces, will continue to emerge. The cloud will continue to grow. We are still at the very beginning of the computing age, which is barely three-quarters of a century old.

The human digital experience, therefore, remains at an early stage as we enter an alternative to the physical universe: the Metaverse of our own creation. So prepare to have your mind blown.


Many people today fear where technologies like artificial intelligence (AI) and robotics are heading. Some even foresee mass unemployment should these technologies triumph and replace the human workforce. Others welcome the idea of using AI and robotics to lessen the burden of work, leading to four-day work weeks. Still others see these technologies as freeing them from routine and releasing their more creative selves.

But I will leave it to Katie to give you her insights into the ongoing technology revolution.

Technology changes business, causing reactions that range from amazement to fear. Businesses become more efficient, more accurate, and capable of delivering products and services previous generations could not have imagined. At the same time, many people fear losing their jobs to machines.

Managing and implementing technology properly helps organizations reap the benefits while avoiding pitfalls. When done right, technological innovations create competitive advantages and increase revenue and profitability.

So what are these technologies that are revolutionizing business?

Artificial Intelligence

Ever since ChatGPT was released at the end of 2022, it has been hard to be online and not see something about the impact of this particular type of AI as well as others.

ChatGPT is a generative AI chatbot built on a large language model (LLM). Today, it and its competitors are creating written content and images, answering online customer service queries, staffing help desks, and more. Companies are flocking to these tools to see what they can do for them. ChatGPT and other AI software are analyzing data at scale and are starting to affect almost every area of business. Where is it all going?

As generative AI gets better at responding to humans and sounding like one, writers and other creatives will face pressure to add value. Organizations will be pushed to stop doing things the way they have always done them. More will focus on creating a culture of innovation, using AI to help with outside-the-box thinking. With the world only recently having shaken off COVID-19, new existential challenges are both present and on the horizon. There is no time for businesses to be complacent.

AI analytics will help companies to optimize operations and will become increasingly granular, able to make suggestions in real time to employees wherever they are in the organization and whatever they are doing. Workers will come to rely on AI insights just as much as they might a skilled coworker. The goal will be to improve performance across the board.

Robotics and Automation

We’ve come a long way from the Ford-style production lines of the early 1900s. Today, robotic arms help manufacture machinery too large and complex for human beings to assemble by themselves. Increasingly, these machines are autonomous, meaning no human operators are needed.

Robots are integrating AI and a particular form of it, machine learning, making them deployable in an increasing number of scenarios. And robots are getting smaller and more agile. Many of Amazon’s warehouse tasks are now being carried out by robots working side-by-side with human handlers.

Of course, robots are not always physical. Today, we have automated chatbots beginning to provide online customer service. These software robots learn and adapt as they are exposed to more data. Soon, help desks and online chat may require very little input from human handlers.

Virtual Reality in Business

When someone talks about virtual reality (VR), many people immediately think of the headsets worn by VR gamers. But VR isn’t just for playing games. Businesses already use it for entirely different purposes, such as digital twin workspaces created in the metaverse, and to bring together remote and hybrid teams and improve collaboration. A company’s digital twin, running in parallel to the bricks-and-mortar business, can serve many purposes. It can expose gaps in operations that can be closed first in the digital world and then in the real one. It can also bring together people in different physical locations to interact within the digital space.

VR can play a critical role in training, particularly where there is a degree of physical risk or where real-world training would be very expensive. This is especially true for companies supporting the military. A good example is Parsons, an American technology-focused defence and security engineering company, which uses VR to train employees on hazard identification strategies without exposing them or the environment to the real thing.

According to Verizon, organizations such as Meta, Microsoft, and Amazon have created new-hire training using VR to help educate people about the culture and requirements of their workplaces and to allow new coworkers to meet in virtual company environments.

Avoiding the Pitfalls of New Technology

While new technologies offer many benefits, organizations need to plan their implementation. Otherwise, they can suffer significant setbacks that hurt their workforce, revenue, and profitability.

For example, many industries already have legacy technologies in place that can be challenging to replace all at once. Business leaders need to have patience and work incrementally to minimize downtime, workflow disruptions, and security concerns.

It’s also important not to lay off employees and replace them with technology that can’t do their full jobs. American media company Gannett found this out the hard way when the newspaper chain tried to use an AI tool to write high school sports dispatches. While AI can do many things humans can do, mistakes will happen without human oversight.


Before posting Katie’s article, I sent it to my good friend, Dan Thompson. Dan has lived with quadriplegia since his teenage years, when he severely damaged his spinal cord in a car accident. I asked Dan to review the article, and he called it a good general overview.

Dan did tell me that, in his personal experience, online hotel booking has not always delivered the accessibility he needed, at times leaving him to sleep in his wheelchair. So as much as technology is levelling the playing field for people with disabilities, not everything works according to plan.

I hope you enjoy Katie’s contribution here and provide comments.

Millions of people around the world live with disabilities. So why is accessibility so severely lacking? For instance, wheelchair users and people who are blind or deaf continue to point out how much they struggle with self-checkout systems in stores. And the Internet is proving no more disability-friendly than stores. A WebAIM report on the accessibility of home pages recently revealed that “across the one million home pages, 50,829,406 distinct accessibility errors were detected—an average of 50.8 errors per page.”

In various industries, however, accessibility is improving. So let’s look at where and how the technology world is addressing accessibility.

The Metaverse

If you are unfamiliar with the metaverse, it’s a 3D-enabled digital space where people socialize, learn, and play using virtual reality (VR), augmented reality (AR), a high-speed internet connection (preferably 5G), and other high-tech tools. This space is improving accessibility because people with various disabilities can use it to experience what it is like to live without their disability.

Users with physical impairments can engage in the metaverse. For example, someone living with arthritis and chronic pain that keeps them from being active can create an avatar in the metaverse and engage with people all over the world without leaving home.

Individuals living with vision, hearing, and cognitive challenges can now use high-contrast colour options, subtitles, real-time captions, and 3D-audio echolocation to help them navigate the digital world. In this way, the metaverse allows people to be who they want to be: their authentic selves.

[Editor’s Note: When Dan and I were working together, we paid a visit to an early-stage augmented reality developer who allowed Dan to experience the feeling of walking through fall foliage with the dry leaves brushing his digital feet. He also was given wings to fly. That was happening well before Second Life, Facebook and now Meta.]

Assistive Tool Compatibility

“More than 2.5 billion people need one or more assistive products, such as wheelchairs, hearing aids, or apps that support communication and cognition,” according to the WHO-UNICEF Global Report on Assistive Technology (AT).

That’s a lot of people and a healthy market for technology companies to address. That’s why the industry is creating products that are compatible with the aids used by people living with specific disabilities. These include:

- Braille e-readers

- Voice assistants

- Assistive keyboards

- Text-to-speech systems

- Screen reading software

- Accessible Rich Internet Applications (ARIA)

- Word predictive software

A great example of this push for assistive device compatibility in the tech industry is the Speech Accessibility Project, a partnership that includes Amazon, Apple, Microsoft, Meta, Google, and the University of Illinois. The partners are working to make voice recognition technology more useful for people with varying speech patterns and disabilities.

[Editor’s Note: Speech recognition is an area where I gained considerable experience, having worked on several projects in the early 1990s when the technology was in its infancy. That’s where I met Dan, who used voice recognition to work with computers.

Dan suggested that word prediction software, a feature of Gmail and other email systems, has been of great assistance to people with disabilities who find computer keyboards challenging. A feature that anticipates the words likely to follow the one you type can dramatically speed up written communication for those whose disability makes keyboarding difficult. In addition, features like autocorrect in applications such as Microsoft Word can be of considerable help.]
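The basic idea behind word prediction can be illustrated with a tiny bigram model that counts which word most often follows each word. This is a hypothetical sketch for illustration only; it is not how Gmail or any particular product implements the feature, real systems are far more sophisticated, and the names `train_bigrams` and `suggest` are made up.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows each word in a sample of text."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def suggest(model: dict[str, Counter], word: str, k: int = 3) -> list[str]:
    """Return up to k of the words most often seen after `word`."""
    return [w for w, _ in model.get(word.lower(), Counter()).most_common(k)]

model = train_bigrams("thank you for your email thank you for the update see you soon")
print(suggest(model, "you"))  # → ['for', 'soon']
```

Offering "for" after "you" saves three keystrokes each time it is accepted, which is where the speed-up for keyboard-challenged users comes from.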

Advancements in Tourism

Tourism has been focused on accessibility longer than most other industries. The following list shows where improvements are being made.

- In-person accessibility – Airports, transportation services, hotels, and popular tourist destinations like theme parks are more accessible today than ever. These services and places provide physical, sensory, and communication access for people with disabilities, including accessible restrooms, elevators, and wide doorways. Destinations use audio guides and sensory-sensitive lighting and sound systems to accommodate related disabilities. At some tourist destinations and in many hotels, staff members are trained in American Sign Language (ASL). A recent survey ranked Las Vegas, New York, and Orlando, Florida as the most disability-friendly cities in the United States. Many Las Vegas shows offer audio descriptions. New York businesses are installing permanent and portable ramps. And Walt Disney World describes its disability-accessible services on its Florida website.

- Travel, hotel, and hospitality websites – Many travel, hotel, and hospitality websites today prioritize guests with disabilities by providing accessible rooms that can be requested through online booking. Other features of these sites that make it easier for those with disabilities include:

- Simple navigation

- Text-to-speech readers

- Digestible content

- Easy access to customer service

- Enabled keyboard navigation

[Editor’s Note: I wonder how much artificial intelligence (AI) tools like ChatGPT will contribute to levelling the playing field for people with disabilities. I suspect it will happen sooner rather than later, considering how rapidly the technology is evolving. This would be a good subject for a future posting here at 21st Century Tech Blog.]

Jean Lamoureux, the hospital’s Executive Director, states, “The number of requests for mental-health consultations is estimated to have increased by 30 to 40 percent during the pandemic. These needs are urgent…and, thanks to the innovation of Paperplane Therapeutics and TELUS, we will transform the way health services are delivered, while having a significant positive impact on patient well-being through technology.”

Telus is one of Canada’s big three in telecommunications. The company has taken on an active role to address mental health in its workplace and to help its customers and Canadians in general.

Paperplane Therapeutics designs therapeutic virtual reality video games. These games are designed to help patients with the pain and anxiety that often accompany illness, surgical procedures, and treatment.

Before the Alma Hospital announcement, Paperplane had successfully demonstrated its technology at CHU Sainte-Justine and the Shriners Hospital for Children in Montreal. The success of the company’s program, called DREAM, showed that virtual reality can change a patient’s perception of pain. Dr. Bryan Tompkins, a pediatric orthopedic surgeon at the Shriners Hospital, described the positive impact on children undergoing medical procedures, noting that VR helped them to “enter a calm, imaginary world.”

We know that children take to technology readily, and VR is no exception. But what about adults? That’s the focus of Alma Hospital’s new program, which is integrating immersive VR across many of its departments and practices. Cognitive behavioural therapists see VR as an effective tool they can now use with adult patients as well as children to help them learn to deal with anxiety.

States Dr. Jean-Simon Fortin, CEO of Paperplane, “The virtual reality therapy that we offer places the patient in a calm and soothing world where they’re prompted to engage in deep breathing exercises, guided by a sensor that we’ve added to the virtual reality headset. This system combines two effective non-pharmaceutical approaches to reduce pain and anxiety, increase patient satisfaction, and make health care professionals’ work a bit easier.”

Dr. Luc Cossette, Head of Psychiatry at Alma, is optimistic that VR will lessen the need to prescribe drugs for pain and anxiety. At the hospital, Paperplane’s VR will be combined with a new device aimed at treating severe depression using magnetic stimulation. The hope is to deploy Paperplane’s technology hospital-wide to treat social phobias and, in oncology specifically, to help manage pain during chemotherapy and radiation therapy.

What is Telus’s role in the Alma Hospital project? The telecommunications company will implement 5G throughout the hospital to provide optimal feeds and speeds for Paperplane’s VR technology. Paperplane describes that technology as purpose-built, clinically validated VR for cognitive behavioural therapy, usable as a standalone treatment or in combination with medications in clinical settings.

Technology is rapidly changing the way we work and learn. For educators and students, it is an avenue for improved engagement and interaction, giving students new ways to learn and grow, whether through online learning, virtual reality, educational apps, or adaptive learning software.

In this post, we explore how technology affects student engagement and success and discuss its potential benefits and drawbacks. We include some of the latest research and case studies in the field and consider what learning will look like as new technologies emerge.

How Technology Impacts Student Engagement

In 2010, Apple published research on how technology could support learning for students with sensory and learning disabilities. This inspired me to look at how technology could support student engagement more broadly, particularly new ways of collaborating and communicating among classmates and teachers. The following are six ways technology is changing the learning environment for students.

- Improving Motivation – Interactive and multimedia-rich learning experiences, such as simulations, games, and virtual reality, are making learning fun, engaging and more participative in classroom settings.

- Encouraging Collaboration – Educational technologies can facilitate communication and collaboration among students and teachers using online resources such as discussion forums, video conferencing, and document sharing. This creates a sense of shared community and teamwork.

- Personalizing the Learning Experience – Adaptive learning software lets students learn at their own pace, tailoring the educational experience to their needs and abilities.

- Increasing Information Access – Technology has made access to information ubiquitous. While textbooks have become prohibitively expensive, the arrival of e-books, video, and interactive multimedia content is breaking down economic barriers, making learning available regardless of where you live and what you can afford.

- Improving Assessment – Teachers can benefit from educational technology using it to track a student’s understanding and progress, do interactive assessments, quickly identify areas where a learner is struggling and provide instant feedback and immediate additional support.

- Creating Flexible Learning Environments – Learning with technology is no longer limited to a brick-and-mortar classroom. Online and blended learning options allow students to learn anytime and from anywhere. This is particularly beneficial for students unable to attend traditional classes such as those with physical or learning disabilities or students in rural or remote settings.

How Current Technology is Enhancing Learning

The arrival of smartphones and tablets has had a revolutionary impact on communications and created opportunities to change how we learn. Mobile devices not only put information resources in our hands but also give us access to a growing body of educational apps for students and teachers.

What are these?

- Learning management systems (LMS) are software platforms that allow educators to create, manage, and deliver online course content.

- Gamification employs gaming and game design elements in a non-game learning context.

- Adaptive Learning is software designed to help meet the individual needs of learners.

- Online Tutoring provides real-time instruction and feedback from human tutors who can be reached over the Internet.

- Mobile Learning is more than learning apps that can be accessed from a mobile device. It also treats mobility itself as a way to create learning experiences beyond the physical classroom.

- Social and Collaborative Learning occurs through interaction with others in online communities or collaborative learning environments.

- Simulation and Modelling use programs to help students understand complex systems and compare the real world to computer-generated scenarios.

- Robotics, Artificial Intelligence (AI), and the Internet of Things (IoT) are technologies that can be used to enhance how learning is delivered to students. And despite the recent controversy over AI tools such as ChatGPT, the positives of using these technologies in education outweigh the potential negatives.

- Virtual and Augmented Reality are two immersive technologies that can be useful teaching aids. And like gamification, they can motivate students who would normally struggle to learn using traditional classroom techniques and settings.

A Final Word

Technology has already had a significant impact on student engagement and success. It is making interactive and personalized learning experiences possible. It is improving communication and collaboration among students and teachers. It has made education more accessible.

It is important to note, however, that technology works best when combined with traditional teaching methods rather than as a replacement.

In the Global South, the biggest challenges are technology accessibility and affordability. But the deployment of global satellite Internet networks like SpaceX’s Starlink and the increasing availability of inexpensive mobile devices will help level the learning playing field this decade.

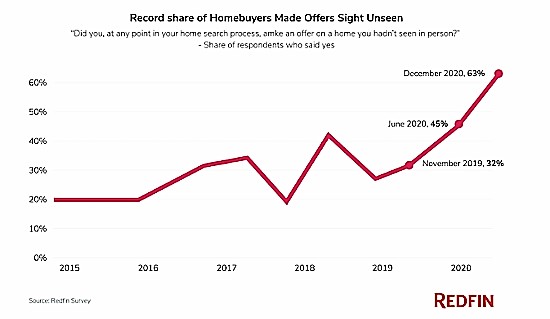

Let’s first look at the growth of computer-based and online video gaming. Just how many gamers are there today? The number of active gamers continues to grow. By 2023, more than 3 billion people will be playing online games.

And according to Mike Allen at Axios, gaming is on course to move to the centre of the entertainment and pop culture universe this coming year. Today the industry generates U.S. $184 billion. The growth of online gaming is one of the reasons Facebook rebranded itself as Meta in an attempt to dominate a near-future virtual world. It is why streaming services like Netflix, HBO and others are adding video gaming options for customers. Gaming has also become multi-generational. Many of today’s parents were the children of the 1980s, the first generation of gamers, and they have passed their interest along to their own children.

Where Gaming is Headed

The COVID-19 pandemic may have proven to be the best thing that ever happened to video games as people found themselves isolated and using the Internet to stay connected to friends, business associates, and family. Playing online interactive games helped pass the time for so many.

Not for me. I haven’t played video games for quite a while. During the pandemic, I have been reading lots of books and magazines, researching and writing articles like this, watching lots of movies, streamed media, and television, and doing crossword and jigsaw puzzles. My first exposure to gaming was Pong, then Space Invaders, and eventually Flight Simulator. I must admit I enjoyed the latter two. But when we got a promotional gift of an Xbox and tried the games that came with it, I just couldn’t get into them and quickly lost interest. The Xbox is long gone.

The same was true when I tried virtual reality environments like Second Life. They never evoked much excitement. So I am a dinosaur when it comes to the world of gaming. That doesn’t mean, however, that I don’t see the merits of gaming.

The technology behind video games continues to evolve both in hardware and software. Players today compete in real-time across the world. Artificial intelligence (AI) entities have joined with human players adding a whole new dimension to the gamer experience. And game designers are increasingly using AI to enhance their offerings.

Gaming is also revolutionizing education. Video games were first introduced into classrooms in the 1980s. I remember titles like Oregon Trail, Where in the World Is Carmen Sandiego? and Reader Rabbit, the last of which was particularly popular with my daughter.

Gamification describes how gaming elements and principles are used in education, whether in classrooms or on the shop floor. Minecraft, Kahoot, Quizizz, and ClassDojo are gaming environments, several of them free, that parents, teachers, and employers can use to gamify learning experiences. And gameplay describes a cognitive process by which players enhance their critical thinking, planning skills, collaboration and communication through playing video games.

So although I may not be the quintessential gamer, I recognize the value that video games bring to our world. Now if we could only get young boys, and increasingly girls, out of their basements and outside to appreciate what is in the real world, I would be that much more accepting.

Online Betting is Another Matter

Online gaming and betting have grown hand in hand, and this year it seems sports have become nothing more than a venue for online bets.

I remember when betting was bingo at the local church or synagogue, poker nights, mah jong tournaments, horse and dog races, brick-and-mortar casinos, and government lotteries. But now, with the Internet combined with the COVID-19 pandemic, gambling has increasingly taken over the virtual world.

There has always been betting on sports, and in the past much of it was illegal. That’s why we had behind-the-bar bookies. And there were scandals. Baseball had the Black Sox World Series fiasco. In its early years, the NBA saw players accused of shaving points for bookies. Boxers took dives so gamblers could get big paydays. And half the Irish Sweepstakes tickets ever sold were counterfeit.

But today gambling is legal and ubiquitous. This season, broadcasts of football games, whether Canada’s CFL or the U.S. NFL, are preceded by betting line discussions. Who will win? What will be the over-under, the point differential? Which players will pass, run and catch their quota of yards? I used to enjoy the talking heads who preceded football games. Not anymore, because now the discussion is less about analysis and more about the odds.

As I stated earlier, gaming and gambling are inextricably linked because both have grown on the Internet. Online gaming is so popular that people now even bet on online gamers. Our smartphones provide access to thousands of gaming apps through Apple’s and Google’s online stores. Placing bets has never been easier. And as one recent television commercial puts it, our world is just “a series of bets.”

Just how big is the online gambling world, and how much bigger is it going to get? Worth U.S. $57.54 billion in 2021, it is expected to grow at a compound annual growth rate (CAGR) of 11.7% from today to 2030. Along with this growth has come a parallel increase in cybercrime. Then there is the rise in compulsive gambling. And as we continue to be preyed upon by “legal” bookies and enticed by games of chance that were once the domain of mobsters, we are all being gamified.
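A compound annual growth rate multiplies the previous year’s value by the same factor every year. The minimal Python sketch below shows that arithmetic under one stated assumption: growth compounds annually from the 2021 base through 2030, which is nine years. The function name `project_value` is hypothetical, and the result only illustrates the quoted rate; it is not a forecast taken from the source.

```python
def project_value(base: float, rate: float, years: int) -> float:
    """Compound a starting value at a fixed annual growth rate for `years` years."""
    return base * (1 + rate) ** years


base_2021 = 57.54  # market size in U.S. $ billions, as quoted in the text
cagr = 0.117       # 11.7% compound annual growth rate

# Assumption: the clock starts at the 2021 base and runs to each target year.
for year in (2025, 2030):
    value = project_value(base_2021, cagr, year - 2021)
    print(f"{year}: about U.S. ${value:.1f} billion")
```

Under that assumption, the 2030 figure works out to roughly U.S. $156 billion, a little under three times the 2021 value. Starting the compounding in a later year would give a larger number.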

He stated, “What the metaverse is to us at GridRaster is an augmented space that can be overlayed on the real world to create close cooperation between humans and machines to help build and maintain almost anything. It could be a metaverse that lets humans work through robots in remote places like Mars or the Moon, or on a factory floor, skunkworks, or design studio.”

This is a far cry from my introduction to Second Life, the first metaverse experience I ever tried. At the time, after flying around virtually and interacting with other avatars, I was largely unimpressed. I could see the potential for the metaverse environment to enable people with disabling physical injuries, but for the average person, I saw it as more of a gaming platform for those with too much time on their hands.

But as Dijam and I talked, he explained how the convergence of a range of 21st-century technologies was going to make the metaverse a powerful tool for companies. He was talking about combining artificial intelligence (AI), robotics, augmented and virtual reality (AR/VR), and additive manufacturing (3D printing). He believed that the impact of all of these under the metaverse umbrella would be felt in every business operation, from design to the factory floor, warehousing, and logistics. The metaverse, he stated, was going to overlay our physical world in ways never contemplated before.

Why is Dijam such a metaverse believer?

Let’s start by describing what GridRaster has developed. The company’s website describes the technology as follows:

“a unified and shared software infrastructure to empower enterprise customers to build and run scalable, high-quality eXtended Reality (XR) – Augmented Reality (AR), Virtual Reality (VR) and Mixed Reality (MR) – applications in public, private, and hybrid clouds.”

What does that all mean?

Simply put, GridRaster creates spatial, high-fidelity maps of three-dimensional physical objects. So if you plan to build an automobile or an aircraft, you can use the software to capture an image and create a detailed mesh model overlay that can be viewed using a VR headset. The mesh model can also be shared with robots and other devices.

GridRaster software integrates wirelessly and through the cloud, which means it can be used anywhere. A human user on Earth could work with a robot on the Moon to build a lunar base.

The software’s XR capability allows for rapid prototyping throughout the design and engineering phases of new product development. Dijam told me that 70% of manufacturing issues can be handled at the design phase, which means substantial time savings.

Another feature produces photorealistic visualizations that technicians can use for training or for maintenance and repair.

And its mixed reality simulation capability allows product developers to do continuous testing throughout the design process.

I asked Dijam for examples of clients using GridRaster. He mentioned Boeing, which is working to design a new aircraft in the metaverse. Two other manufacturers, Hitachi and Volkswagen, are using the metaverse the same way.

Another client, the US Department of Defense (DoD), is using the metaverse to help with its aircraft maintenance depot operations. DoD is deploying robotic arms at these depots. With GridRaster, programming a robot, which used to take hours, now takes 15 minutes.

GridRaster works with off-the-shelf headsets like the Microsoft HoloLens. With the headset, the user sees a detailed mesh model that serves as a virtual 3D map and can instruct or guide a robotic arm to complete a task. In the case of the DoD depot application, the robots are painting, grinding, and replacing defective or damaged aircraft parts.

The mesh model viewed through the HoloLens provides millimetre-scale accuracy. For surgical and medical laboratory applications, the software can visualize at a micrometre scale.

The last example Dijam shared was the US Space Force (USSF), which is using GridRaster in its project to build and deploy space robots to deal with the problem of orbital debris. USSF’s goal is to have human operators on Earth work with robots in orbit to target trash and give it a deorbiting push so it burns up in the atmosphere rather than remaining a potential hazard to working satellites and low-Earth orbit human missions.

Our reality is becoming increasingly virtual with everyone governed by online social behaviours and norms. And online 3D games have moved us into the virtual space in ways many would have never contemplated. So much so that we are developing systems to provide us with an alternate reality where we act without fear of prosecution or judgement. This is a world we are calling the Metaverse.

The Metaverse: Changing How We Live

The term Metaverse was coined by Snow Crash author Neal Stephenson in 1992. It refers to a 3D digitized world. Long a subject in the virtual reality gaming community, the Metaverse is described by some as integral to our future, changing the way we live our lives. Replacing physical reality with a virtual one may sound like science fiction, but it is already becoming a real possibility.

Immersion in today’s 3D virtual games keeps getting better, and those worlds feel more real than ever before. But will they become our main means of escape from reality, much as television and movies are today?

Metaverse: Virtual Travel

The Metaverse is paving the way for us to experience real or fictitious places from the comfort of our homes. Open-world games are a great way to escape the current real-world pandemic and the existential threat of global warming. One example of a virtual fictitious place can be found in the game Red Dead Redemption 2 (see an image from it at the top of this posting). The virtual reality it provides is a pastoral experience representing a patchwork of different areas of the United States in the late 19th century.

With immersive games, we can visit islands and cities, travel through time or space, find ourselves deep in the jungle, or even create a virtual world of our own design.

Metaverse: Virtual Assets

Today, a gaming PC bought online lets us do more than play computer games. It also lets us buy virtual assets. For example, in June of last year, Republic Realm purchased a virtual plot in Decentraland, a virtual world. The plot, held as a non-fungible token (NFT), sold for the equivalent of $913,228.20. Several artists and investors have joined this trend, acquiring virtual properties on such platforms and paying with cryptocurrencies and NFTs.

Metaverse: Virtual Industries

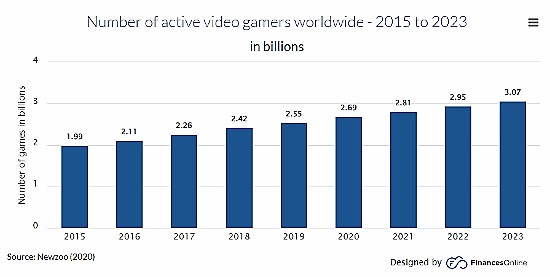

The online virtual world of the Metaverse is showing up in other ways. Prospective buyers can now tour a house for sale virtually instead of doing a physical walk-through. Recently it was reported that 50% of U.S. adults on the Internet have taken virtual tours. And according to another survey, 63% of home buyers reportedly made offers on homes without seeing them in person.

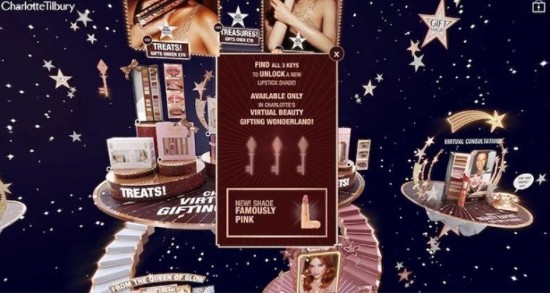

And it’s not just real estate where the Metaverse is becoming a part of our reality. Today beauty is one of several consumer-centric industries that are buying into the virtual world experience. One example is Charlotte Tilbury, which introduced its first virtual store in 2020. Fast forward to 2022, and now we can visit the company’s virtual stores and game-based islands. Called Charlotte Tilbury’s Virtual Beauty Gifting Wonderland, it is an online shop and virtual beauty consultation site. Visitors who find three hidden keys on one of the virtual islands unlock an exclusive lipstick shade.

Metaverse: Virtual or Not

From what we have described, it is becoming obvious that the Metaverse is blurring the definition of online gaming while at the same time creating an escapist alternate reality for Internet users to explore. Will more industries join in providing immersive virtual world experiences? Will automobile manufacturers create virtual environments for us to outfit and test drive our next vehicle? Will our jobs be immersed in the Metaverse, and will we ever have to leave our homes again to experience reality, or will we instead live vicariously in the virtual one?

In 1909, E. M. Forster wrote the novella “The Machine Stops,” in which people interact with the world by having it brought to them rather than going out to experience it. Was he foreshadowing the Metaverse? Will this be our future?


What is haptic feedback? It is technology that recreates the sensation of touch, one of our five senses. We experience it when using smartphones today. When you set your phone to vibrate, you can feel that someone is messaging you. And many smartphone applications provide haptic feedback as a feature.

But going from haptic feedback on a flat, two-dimensional device to a three-dimensional, virtual object is a “dimensional” leap. You can achieve this in several ways. One is aerohaptics, an air-based haptic feedback system that creates a sense of touch without requiring the user to wear any enabling apparatus such as a headset. Another is to use focused ultrasound to achieve a similar result.

The Leap Motion controller used by Professor Dahiya comes from Ultraleap. Ultraleap also has a competing haptic feedback solution: small ultrasound speakers emit precisely directed waves, timed by computer algorithms so that they arrive at the same point in space at the same moment, creating tactile effects that a hand can detect in mid-air. In other words, virtual touch.
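The timing described above is the general phased-array focusing idea: each speaker fires after a delay chosen so that every wavefront reaches the focal point at the same instant. The minimal Python sketch below illustrates that principle only; it is not Ultraleap’s algorithm, which the article does not describe. It assumes a hypothetical flat 4-by-4 array with 1 cm spacing, a focal point 20 cm above the centre of the array, and sound travelling at about 343 m/s in air. The function name `focus_delays` is likewise hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at roughly 20 °C (assumed value)


def focus_delays(transducers, focus):
    """Return a firing delay in seconds for each transducer so that all waves
    arrive at `focus` together: the farthest speaker fires first (delay 0) and
    closer speakers wait for the difference in travel time."""
    distances = [math.dist(t, focus) for t in transducers]
    farthest = max(distances)
    return [(farthest - d) / SPEED_OF_SOUND for d in distances]


# Hypothetical array layout: 4 x 4 grid in the z = 0 plane, 1 cm spacing.
grid = [(x * 0.01, y * 0.01, 0.0) for x in range(4) for y in range(4)]
focal_point = (0.015, 0.015, 0.20)  # 20 cm above the centre of the grid

delays = focus_delays(grid, focal_point)
print(f"largest delay: {max(delays) * 1e6:.3f} microseconds")
```

Because each delay plus its travel time equals the same total, the pressure from all sixteen speakers peaks together at the focal point. Moving that point in real time is what lets such a system trace tactile shapes on a hand.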

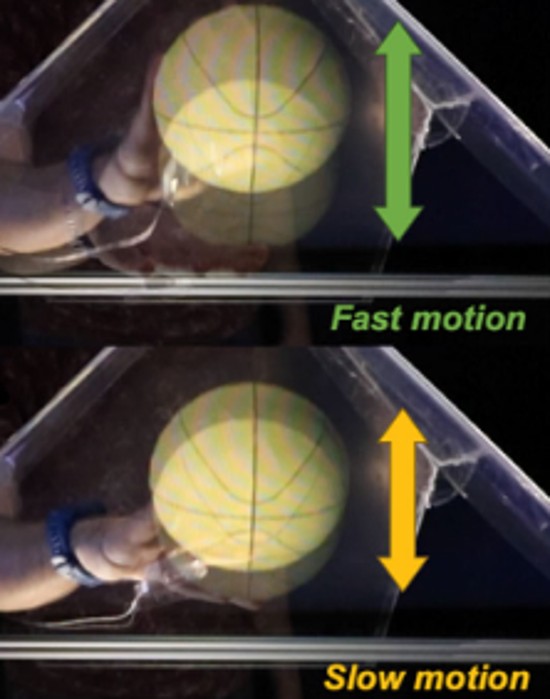

Aerohaptics takes a different approach, pairing 3D-display technology with directed airflow to create virtual touch. The University of Glasgow’s Bendable Electronics and Sensing Technologies (BEST) research group came up with the idea.

Their demonstration is a holographic bouncing basketball hovering in space that anyone can interact with. The aerohaptic feedback lets participants feel the virtual ball as it rolls off their fingertips. They can bounce it, slap it against their palms, and apply varying force and direction to where and how it goes. An illustration of the developed system can be seen at the top of this article.

Using airflow and varying its intensity is a novel approach to creating a virtual sense of touch. As you bounce the basketball with harder and faster movements, the haptic feedback increases. More subtle movements cause the airflow to diminish and the ball to move accordingly.

So which is better? Ultrasound haptics or aerohaptics?

The advantage goes to aerohaptics, for two reasons.

- Although both deliver mid-air haptic feedback without having to wear anything, pressurized airflow as a feedback mechanism is far less expensive than a raft of ultrasound speakers controlled by advanced computer algorithms.

- Pressurized airflow also scales better to a much larger space without the complexity and cost of multiple ultrasound speakers.

Professor Dahiya sees the technology as a precursor to the Trekkian holodeck. He notes that aerohaptics creates “a convincing sensation of physical interaction on users’ hands at a relatively low cost.” The next step, already on the drawing board, is “adding temperature control to their airflow to deepen the sensation of interacting with hot or cool objects.” But he doesn’t yet have a solution for holodeck-style virtual objects that display sentience and follow plot lines.

As for future uses, he says the technology “could form the basis for many new applications…such as creating convincing, interactive 3D renderings of real people for teleconferences,” or “it could help teach surgeons to perform tricky procedures in virtual spaces during their training or even allow them to command robots to do the surgeries for real.”
